How AI transforms Open Innovation

Practical strategies and risks for innovation leaders embedding AI across the Open Innovation lifecycle.

Previously on Open Road Ventures: Carlo explained the universal pattern of technological innovations.

Why AI and Open Innovation?

For years, innovation teams have treated AI as an add-on: useful for scraping patent data, speeding up desk research, or analyzing pitches. That era has passed. What we’re witnessing now is a shift in architecture: open innovation is being rebuilt with AI at its core.

Firms now have access to a new class of AI tools that supercharge open innovation. They can tap into platforms like Patentplus to scout technologies and partners across ecosystems, use large language models (e.g. ChatGPT) to mine partner documentation for opportunity signals, or deploy agentic tools like Cicero to structure, negotiate, and govern collaborative alliances.

Across industries, from pharma to utilities, companies are weaving generative agents, copilots, and RAG systems into the very heart of their innovation workflows. Scouting becomes a graph. Evaluation becomes a multi-agent dialogue. And for firms that embrace this, the payoff is clear: faster sprints, better fit, and more shots on goal.

But there’s risk, too: strategic flattening, trust erosion, IP fog. So the question moves beyond whether to blend AI into open innovation. The focus turns to doing it consciously, capably, and competitively.

This article offers a playbook: where AI shifts the game, where it strains it, and how to steer the transformation.

From “open” to “open + AI”: what actually changes

Let’s anchor on definitions.

Open innovation (OI) means structured collaboration between an organization and external players (startups, universities, consortia, even competitors) to accelerate R&D, access novel capabilities, and de-risk early-stage bets. What once resembled a funnel now functions as a network.

Artificial intelligence (AI), in this context, includes both predictive models (for sensing trends, assigning scores, or forecasting outcomes) and generative systems (for ideation, summarization, coding, simulation). When combined with multi-agent architectures, these tools move beyond support roles and start to shape outcomes.

Here’s what shifts in the OI game:

Speed: scouting, synthesis, and evaluation can move from weeks to minutes.

Breadth: AI reveals long-tail signals (patents, founders, papers) that often remain hidden.

Signal-to-noise: generative clustering surfaces what matters, early.

Experiment cost: simulation tools reduce the price of “what if” scenarios.

Reuse: past decks, notes, and insights become accessible and remixable.

Bottom line: AI adds automation, creativity and intelligence across the open innovation lifecycle.

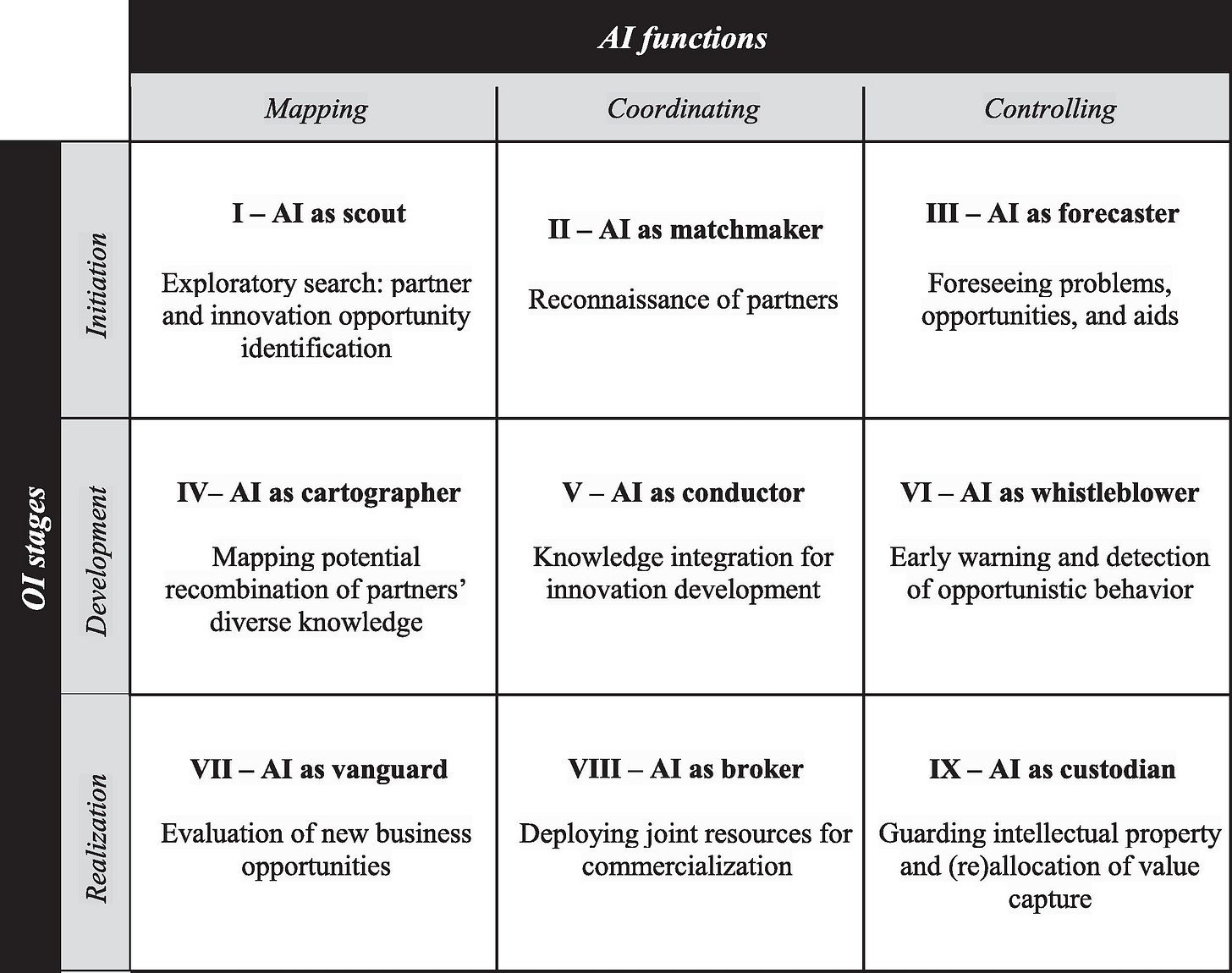

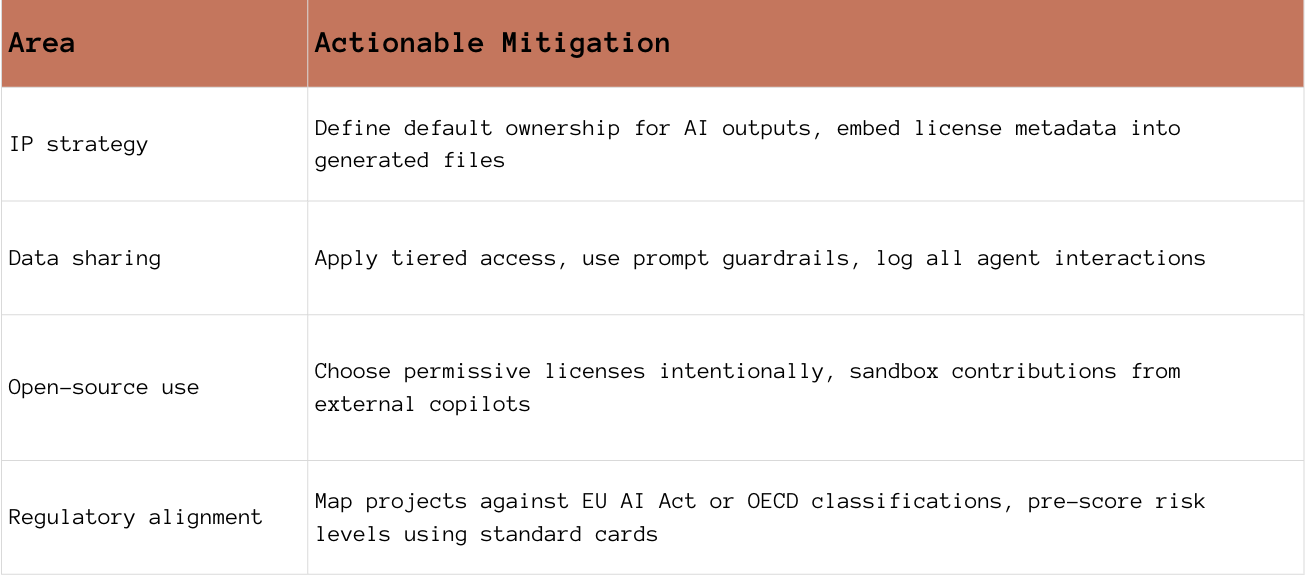
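To make the signal-to-noise point concrete, here is a minimal sketch of grouping incoming signals by topic. It is a toy stand-in, not generative clustering: it uses plain word overlap (Jaccard similarity) where a real radar would likely use embeddings. The function names, the 0.3 threshold, and the sample signals are all assumptions made for illustration.

```python
def tokens(text: str) -> set[str]:
    """Lowercased word set; a crude stand-in for an embedding."""
    return set(text.lower().split())


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of words two signals have in common (0.0 to 1.0)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def group_signals(signals: list[str], threshold: float = 0.3) -> list[list[str]]:
    """Greedy grouping: a signal joins the first group whose seed it resembles."""
    groups: list[list[str]] = []
    for signal in signals:
        for group in groups:
            # Compare against the group's first member (its "seed").
            if jaccard(tokens(signal), tokens(group[0])) >= threshold:
                group.append(signal)
                break
        else:  # no existing group was close enough, so start a new one
            groups.append([signal])
    return groups


# Hypothetical signals, invented for illustration only.
signals = [
    "solid state battery patent filing",
    "new solid state battery startup",
    "hydrogen electrolyzer pilot paper",
    "hydrogen electrolyzer cost study",
]

groups = group_signals(signals)
for group in groups:
    print(len(group), group)
```

Even this crude version shows the idea: patents, startups, and papers on the same theme end up side by side, so a reviewer reads themes instead of a flat feed.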

The AI × Open Innovation Value Chain

The University of Cambridge’s Institute for Manufacturing (IfM) proposed a helpful typology of AI roles in open innovation, ranging from AI as scout to AI as custodian. Their framework classifies AI’s contribution across three functions (mapping, coordinating, controlling) and three OI stages (initiation, development, realization). It’s a powerful mental model to frame how tools, agents, and models distribute across the value chain.

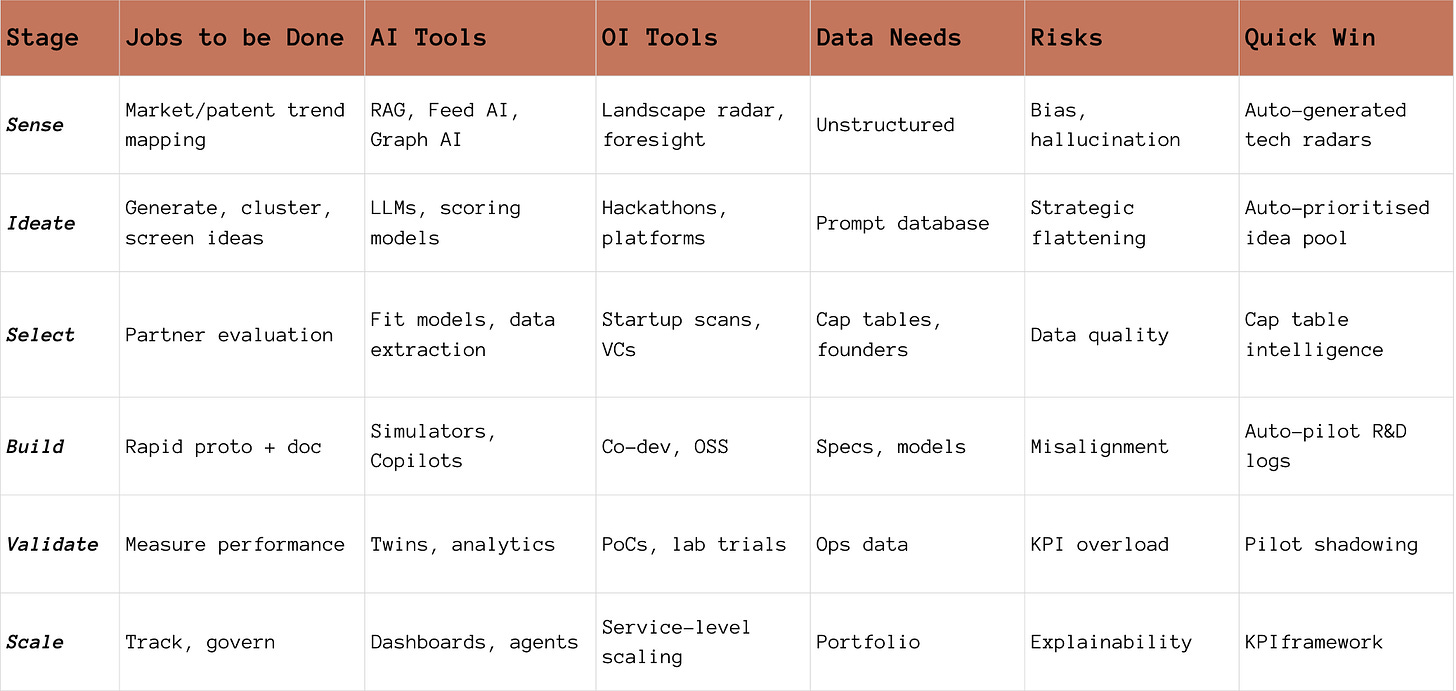

You can also think of open innovation as a value chain with six interlinked stages: sense, ideate, select, build, validate, and scale and govern. AI now plays a distinct role in each. But this is more than automation. It’s orchestration.

Each stage calls for a different blend of tools, data, collaboration models, and decision frameworks. What follows is a field-tested map for how AI can enable (and elevate) each phase of the journey.

1. Sense

Jobs: spot trends, weak signals, adjacent threats.

AI: Retrieval-augmented generation (RAG), feed monitoring, semantic clustering.

OI Tools: foresight hubs, startup ecosystems, tech scouts.

Quick win: build a dynamic landscape radar that updates weekly using open data + internal queries.

2. Ideate

Jobs: generate, cluster, and evaluate ideas.

AI: LLMs for ideation, prioritization models, semantic similarity search.

OI Tools: hackathons, prompt labs, idea marketplaces.

Quick win: run a two-hour generative workshop using past pitch decks as input fuel.

3. Select

Jobs: find and assess the right partners.

AI: cap table analytics, founder scoring, automated due diligence.

OI Tools: venture clienting, VC syndicates, startup studios.

Quick win: deploy AI agents to score founder-market fit based on experience data (as a2a did; see the case snapshot below).

4. Build

Jobs: prototype, document, co-develop.

AI: code copilots, legal assistants, design simulation tools.

OI Tools: OSS projects, joint ventures, digital twins.

Quick win: use legal copilots to draft NDAs and term sheets with fallback clauses.

5. Validate

Jobs: test concepts, simulate scenarios, measure early KPIs.

AI: digital twins, process analytics, shadowing agents.

OI Tools: labs, pilots, regulatory sandboxes.

Quick win: automate pilot reporting and payment milestones via agent workflows.

6. Scale & Govern

Jobs: govern portfolio, ensure alignment, manage risk.

AI: scenario modeling, foresight engines, agent orchestration.

OI Tools: internal venture boards, open platforms, federated models.

Quick win: run a quarterly portfolio foresight using AI to map readiness, signals, and blockers.

The result? Less guesswork, more visibility. And a governance model that adapts as fast as the ideas do.
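If you want to work with this map rather than just read it, one option is to keep it as data. The sketch below encodes the six stages exactly as listed above and adds a small lookup. The `Stage` class, the `VALUE_CHAIN` name, and the `stages_mentioning` helper are illustrative choices of mine, not part of any framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    jobs: str
    ai: tuple[str, ...]
    oi_tools: tuple[str, ...]


# Contents copied from the six-stage map above.
VALUE_CHAIN = (
    Stage("Sense", "spot trends, weak signals, adjacent threats",
          ("retrieval-augmented generation (RAG)", "feed monitoring", "semantic clustering"),
          ("foresight hubs", "startup ecosystems", "tech scouts")),
    Stage("Ideate", "generate, cluster, and evaluate ideas",
          ("LLMs for ideation", "prioritization models", "semantic similarity search"),
          ("hackathons", "prompt labs", "idea marketplaces")),
    Stage("Select", "find and assess the right partners",
          ("cap table analytics", "founder scoring", "automated due diligence"),
          ("venture clienting", "VC syndicates", "startup studios")),
    Stage("Build", "prototype, document, co-develop",
          ("code copilots", "legal assistants", "design simulation tools"),
          ("OSS projects", "joint ventures", "digital twins")),
    Stage("Validate", "test concepts, simulate scenarios, measure early KPIs",
          ("digital twins", "process analytics", "shadowing agents"),
          ("labs", "pilots", "regulatory sandboxes")),
    Stage("Scale & Govern", "govern portfolio, ensure alignment, manage risk",
          ("scenario modeling", "foresight engines", "agent orchestration"),
          ("internal venture boards", "open platforms", "federated models")),
)


def stages_mentioning(term: str) -> list[str]:
    """Names of stages whose AI or OI tool lists mention the term."""
    term = term.lower()
    return [
        stage.name
        for stage in VALUE_CHAIN
        if any(term in item.lower() for item in stage.ai + stage.oi_tools)
    ]


print(stages_mentioning("digital twins"))
print(stages_mentioning("agent"))
```

A lookup like this makes overlaps visible: a capability that shows up in more than one stage is a candidate for a shared investment rather than two separate pilots.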

A useful mapping tool (I love matrices)

What brings this to the next level? Agents.

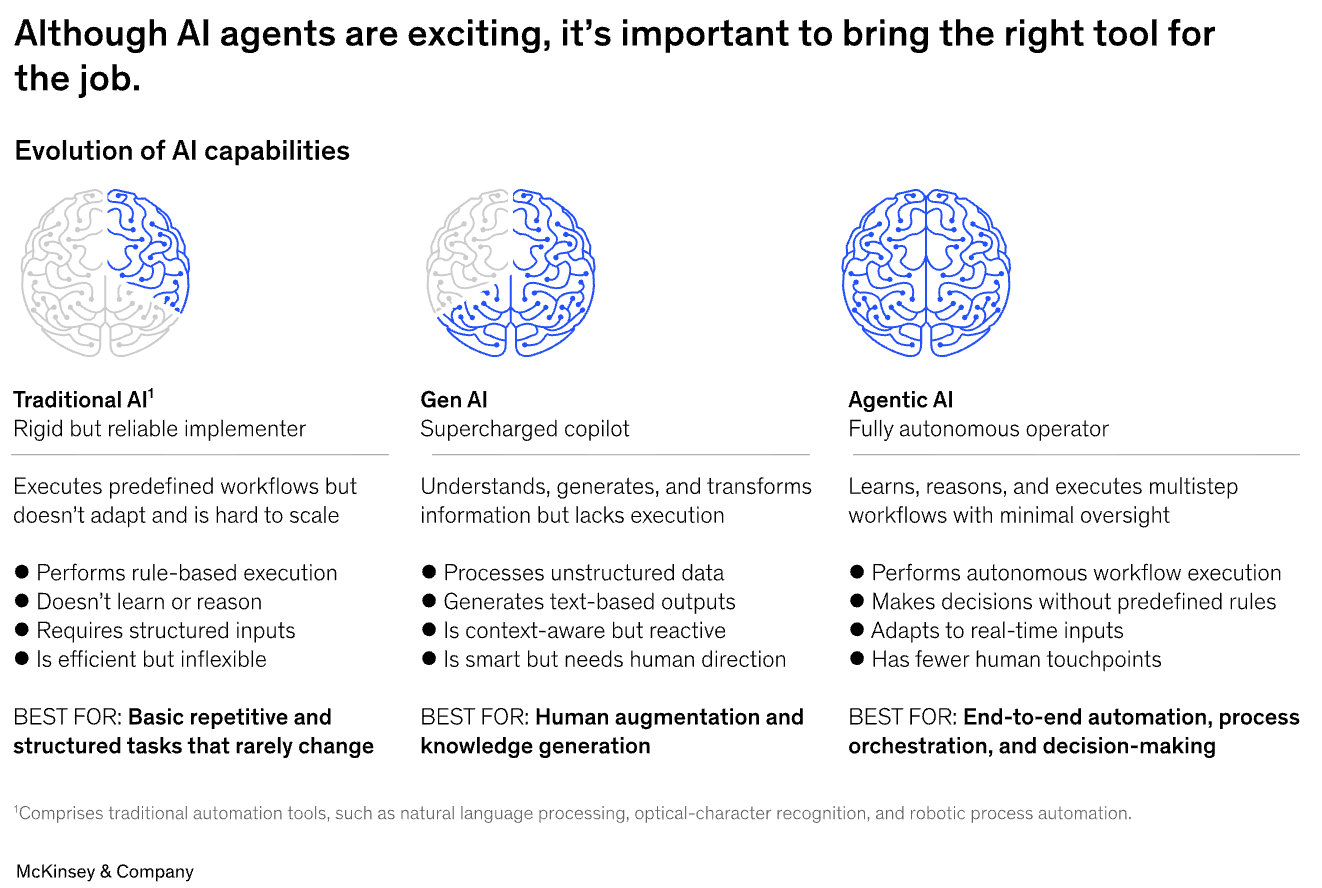

AI agents push open innovation beyond prompting. They navigate complexity, execute workflows, and scale discovery autonomously. Unlike static tools, agents can scan patent flows, evaluate partners, and initiate outreach, all in one loop.

McKinsey calls this shift “agentic AI” and sees it unlocking massive productivity gains in R&D-heavy industries (source). But agents need guardrails: they excel with bounded tasks, solid data, and clear feedback signals.

The result: less friction, more orchestration, and an open innovation system that thinks ahead.
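What might “bounded tasks” look like in practice? Here is a minimal sketch, assuming an agent that may only call allow-listed actions and that stops after a fixed step budget. The `BoundedAgent` class, the action names, and the stubbed handlers are hypothetical; no real agent framework or vendor API is implied.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class BoundedAgent:
    # Only actions in this allow-list can ever run.
    allowed_actions: dict[str, Callable[[str], str]]
    # Hard cap on planned steps, including blocked ones.
    max_steps: int = 5
    # Audit trail: the "clear feedback signal" a human can review.
    log: list[str] = field(default_factory=list)

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        results: list[str] = []
        for step, (action, arg) in enumerate(plan):
            if step >= self.max_steps:
                self.log.append("stopped: step budget exhausted")
                break
            handler = self.allowed_actions.get(action)
            if handler is None:
                # Not allow-listed: record it and move on without executing.
                self.log.append(f"blocked: {action}")
                continue
            results.append(handler(arg))
            self.log.append(f"ran: {action}")
        return results


# Stub handlers standing in for real scouting and evaluation tools.
agent = BoundedAgent(
    allowed_actions={
        "scan_patents": lambda query: f"patents matching '{query}'",
        "evaluate_partner": lambda name: f"draft evaluation for {name}",
    },
    max_steps=3,
)

results = agent.run([
    ("scan_patents", "solid-state batteries"),
    ("send_outreach", "founder@example.com"),  # not allow-listed
    ("evaluate_partner", "ExampleCo"),
    ("scan_patents", "heat pumps"),  # beyond the step budget
])

for entry in agent.log:
    print(entry)
```

In this sketch outreach is deliberately left off the allow-list, so the agent can scan and evaluate on its own while contacting a partner stays a human decision.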

Case snapshots

a2a — AI agents for internal bottlenecks

The Italian utility player a2a started small, targeting a few frictions inside its innovation funnel. Pitch decks sat unread, founder due diligence took days, and trend monitoring felt reactive. So the team built narrow AI agents, each with a focused task: one for structuring pitch materials, one for cap table insights, another for tracking milestone progress across the portfolio. (Source: a2a keynote at Polimi GSoM)

BioPharma — faster discovery through AI-enhanced scouting

One global pharma firm restructured its early-stage discovery program around AI-augmented scouting. Instead of waiting for academic partners to pitch new molecules, they trained an AI model to scan publications, patents, and conference abstracts. It flagged unorthodox signals (combinations of biomarkers and compounds) that the team would have missed using traditional keyword filters.

Human factor and strategic trust

Even as AI links data points and proposes patterns, the human role deepens. As Andrea Durante from Eldor said in a webinar I attended recently: “AI excels at identifying hidden correlations, but the true spark of innovation comes from lateral thinking connecting dots into visionary opportunities.”

Relational trust is another frontier. AI might suggest partnerships, but only people can form the bonds that sustain ecosystems. Strategic collaboration builds on credibility, nuance, and shared risk, elements that no model predicts or codes.

Preserving this human layer is not nostalgic. It’s strategic. It ensures that AI enhances vision, rather than flattening it.

Governance, IP, and risk

AI rewrites the playbook, but without new rules, the game tilts fast. Innovation teams now navigate a web of legal, ethical, and technical tensions:

Strategic flattening from prompt-fed sameness

IP confusion over who owns generated content

Data exposure through oversharing

Open-source risk when foundations mix permissive and protected licenses

Compliance drift as models evolve in production

Here’s a field checklist to stay ahead:

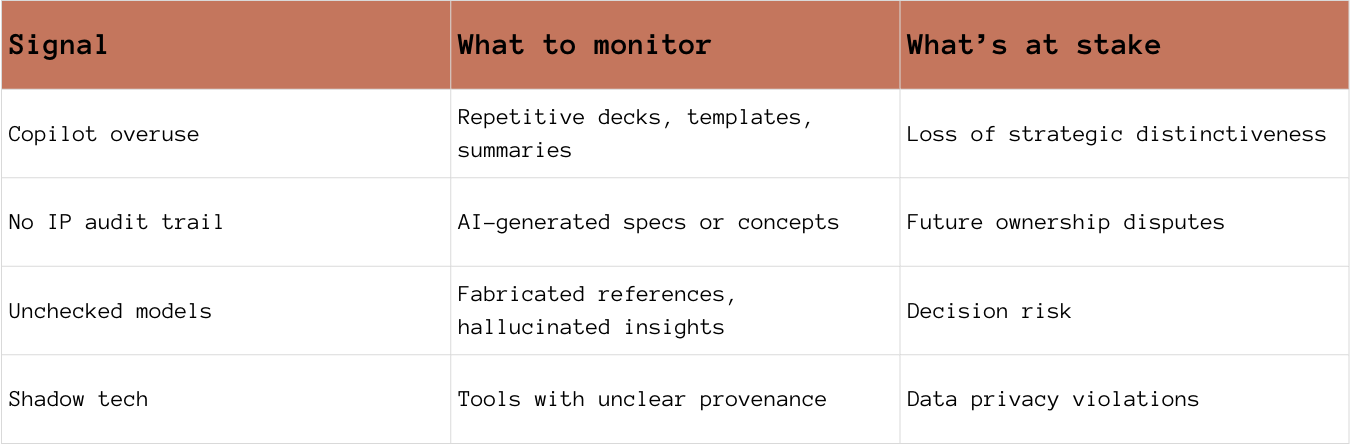

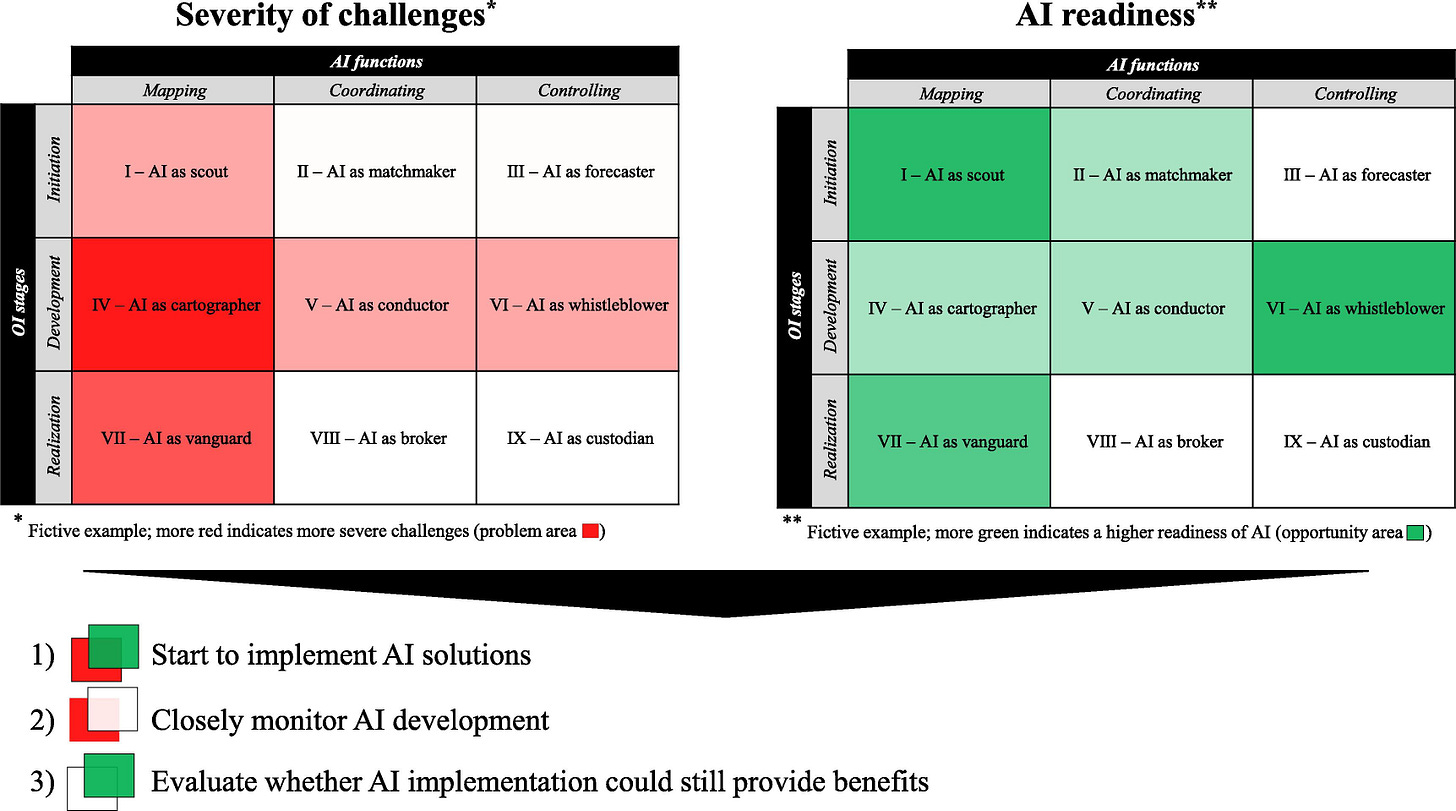

Red flags to watch

Risk mitigations table

Governance here means more than policy. It means productizing trust. And like any product, trust must be designed, tested, versioned, and monitored.

Closing thoughts

AI gives open innovation new surface area. It doesn’t just accelerate what exists; it expands what’s possible. But value flows only when ambition meets structure.

If you lead innovation, ask yourself:

Where in your innovation value chain do you face the most friction, and could automation remove it without adding opacity?

Do you control the data needed to train, fine-tune, or fuel the AI? And if not, are you comfortable letting your partners shape the insight edge?

How will you design the process to keep humans in the loop, not just for control, but to preserve context, creativity, and trust?

Start small. Codify learnings. Then scale with focus.

Let’s hear from you: Where do you see the highest potential (or tension) between AI and open innovation in your org? Hit reply or tag us with your take.

